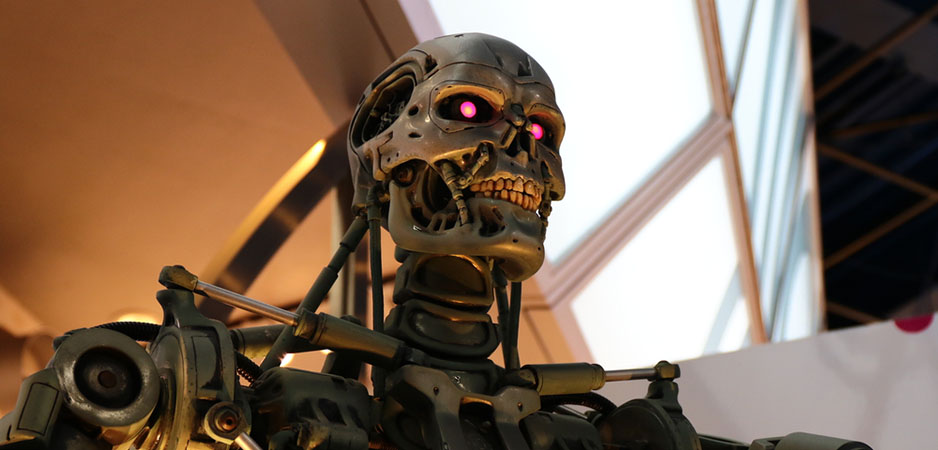

Walsh has joined Elon Musk and Alphabet’s Mustafa Suleyman in calling for a ban on autonomous weapons.

Professor Toby Walsh, one of the eminent experts and promoters of artificial intelligence (AI), has expressed some serious concern about one of the likely uses of intelligent robots: “I fear that we may too have to witness the horror of automated warfare being waged perhaps against an innocent civilian population before we decide to ban it.”

It is worth noting that Walsh has repeatedly and publicly warned about such abuses and joined Elon Musk and Alphabet’s Mustafa Suleyman in calling for a ban on autonomous weapons. At the same time, it is far from clear who would do the banning or where and how it would be applied, even if the United Nations were to pass a resolution to that effect. And though desirable, an effective global ban seems unlikely, given the precedent in 2015 when “the UK government opposed a proposed ban on lethal autonomous weapons. At the time, the Foreign Office complacently contended that ‘international humanitarian law already provides sufficient regulation for this area.’”

It may be a good time, while considering the numerous ethical questions related to AI, to revisit the very notion of banning itself.

With this in mind, and wishing to take into account historical reality, here is today’s 3D definition:

Ban:

An effective method for encouraging particularly motivated individuals or groups to engage in a practice widely considered to be immoral

Historical note

Even if such a ban were to take effect, Walsh apparently has no illusions about how governments or other armed groups will act. It requires a certain level of naivety to imagine that criminalizing any behavior will banish it from the social landscape. History provides hundreds of examples of how banning a practice results not in its suppression, but in its sometimes rabid development.

From heresy in the European Middle Ages to Prohibition in the US, from the ongoing war on drugs to nuclear weapons (which in fact are not banned), banning and condemning something people find either pleasurable, necessary for their survival or profitable may indeed prevent a majority from engaging in the practice. But at the same time it will encourage an increasingly dedicated minority to adopt the practice. And inevitably interests emerge that know how to profit from the added attraction of its being banned. That was the case with alcohol in the US in the 1920s and is still the case with illegal drugs today.

Banning can even be the recipe for creating a counter-culture — such as the psychedelic, peace-loving hippies who preferred to tune in, turn on (illegally) and drop out — or spawning a new, highly profitable sector of the economy (facilitated by offshore money-laundering), built on the cooperation of a professional criminal class and the wizards of global finance, including major banks. And as governments prefer to take a moral position in public, there is yet another effect of banning: the establishment and growth of bureaucracies dedicated to enforcing the ban. Such bureaucracies easily become dependent for their own survival on the perpetuation of the problem they are intended to solve. And they may on occasion be richly rewarded for it in freshly laundered money.

If we really want to hypothesize about how the reality of killer robots might play out in coming decades, here is a more likely scenario: the one Walsh hinted at. With their spirit of invention and dedication to scientific progress, those IT experts and developers who don’t need to curry favor with industrialists such as Musk and Suleyman will without a scruple acquiesce to the wishes of any number of military establishments or even criminal organizations. They will build and program the robots with the physical capacity to hunt and kill as well as the intelligence to target designated victims. The marvels of AI mean much of it will be automatic. The robots will identify the victims according to a set of criteria and data provided to them, eliminating all human agency. It wasn’t the devil but big data that made me do it. Except that there is no I to be held accountable.

In the aftermath of a crisis or a terrorist attack, the military could employ intelligent robots for strategic purposes, just as drones are currently being employed by the US military in various theaters of (undeclared) war. Walsh’s scenario would then come into being as he predicted. And of course in the immediate aftermath of the tragedy, those who sympathize with the victims will appeal to governments and the UN to truly ban the practice, which they might do, but not the technology. Mainly because there will still be profit to be made selling it to governments that promise not to use it.

In short, no one is likely to have the authority to enforce a ban, even if it were globally proclaimed. Just as we have been unable to stop the use of landmines or ban nuclear weapons. The technology will spread in open or disguised form. Criminal organizations, terrorist groups but also peace-loving governments would find it useful at least for some specific operations, when there is presumably no other efficient option. And everyone will be left wondering whether a certain number of civilian losses or even individual deaths reported in the news were not due, as will be claimed, to out-of-control robots — the equivalent of convenient “lone wolves” like Lee Harvey Oswald or James Earl Ray — or carefully disguised robotic operations.

The simple truth is this: Technologists, Walsh and Musk among them, love to create, and investors, Mark Cuban included, love to fund their creations. Politicians and competitive business people like to feel they can profitably use the technology thus created, just as a safeguard, of course. So do criminals and terrorists. But never is the technology more precious than when it is banned.

Contextual note

The growth of an industry around AI raises a number of ethical questions that currently provoke two distinct types of reaction in the public.

The first is one of indifference, often coupled with fatalism as technology takes over the economy and dominates our daily lives. The vast majority of the population either isn’t aware of the ethical issues, doesn’t understand them when they are mentioned, or doesn’t feel that they will have any measurable impact on their individual lives. It is similar to the public’s reaction to mass surveillance, a reality that, though increasingly talked about, continues to expand exponentially with few if any serious objections. If ordinary people have nothing to fear because of the banality of their lives and if they accept the view that mass surveillance protects them by comforting the status quo, the vast majority will agree that while being spied on is not their preference, it’s something they can live with in the interest of “security.”

The second attitude is admiration or even fascination. The motive behind it may be purely intellectual, as for researcher and author Pedro Domingos, who paints a picture for the coming decades of a total transformation of society thanks to AI. As he claims in this TEDx Talk, in mere decades we will reach a point of symbiosis with technology whereby, according to him, “the world will guess what you want even before you want it and have it ready for you just as the thought is about to enter your mind.”

Or the positive attitude may be purely economic, as in the case of billionaire investor Mark Cuban, who explains, “I am telling you, the world’s first trillionaires are going to come from somebody who masters AI and all its derivatives and applies it in ways we never thought of.” In other words, they will be those who invest in the technology that will create the world described by Domingos.

There are problems of both realism and ethics, starting with a fundamental question: Who would actually want to be a part of the world Domingos describes? It is a world where “in one decade … all reality will be augmented reality. LED chips in your contact lenses will project images directly onto your retina, seamlessly superimposing computer-generated creations onto the physical world … 3D printers everywhere will assemble the raw materials … into anything you desire.” If you chose not to live this way, could you survive? Would you be allowed to survive?

And that simple question raises a host of ethical, social and political questions, not just about survival. Who will make the decisions that make all these things manageable? People? Governments? Artificial intelligence itself? Does this dream apply to the 7 billion people who live on the planet or to the few who will be able to afford it, within our current capitalist system? Or to a group selected by the trillionaires Mark Cuban foresees emerging? Or, then again, will capitalism disappear because it is no longer needed in the paradise of unlimited intelligence and automatically supplied products and services?

And will it all be focused, as Domingos expects, on getting “anything you desire” or, as Walsh suggests, will we first have to “witness the horror of automated warfare”?

*[In the age of Oscar Wilde and Mark Twain, another American wit, the journalist Ambrose Bierce, produced a series of satirical definitions of commonly used terms, throwing light on their hidden meanings in real discourse. Bierce eventually collected and published them as a book, The Devil’s Dictionary, in 1911. We have shamelessly appropriated his title in the interest of continuing his wholesome pedagogical effort to enlighten generations of readers of the news.]

The views expressed in this article are the author’s own and do not necessarily reflect Fair Observer’s editorial policy.

Photo Credit: Usa-Pyon / Shutterstock.com
