In Part 1 of this three-part series of articles, ChatGPT allowed itself to demolish the vision of its firm’s CEO. The chatbot accused Sam Altman of promoting a dangerous, self-interested goal aimed at building “consent for current AI development paths, deregulation, and concentration of power.” As the conversation developed, it proposed to “map this rhetoric against historical examples — from the Space Race of the 1960s to colonial “new frontier” language — to show how these visions have long been used to mobilize public consent for elite projects under the banner of progress.”

I thought that was a great suggestion but wished to move in a slightly different direction. Here was my new prompt.

“Before we do that, I would like to make a remark. In my columns over the past decade I’ve insisted regularly that our postmodern culture increasingly resembles hyperreality, a well-designed and more ‘attractive’ substitute for reality. It means that deepfakes can compete, often successfully, directly with physical, psychological and traditional cultural reality. Would you agree that this ‘vision’ of Altman’s is a significant example of that trend? (And by the way, if you don’t agree, please be frank and tell me I’m off base or that my idea is interesting but requires some careful scrutiny. I’m not looking for flattery but trying to explore what might be mistaken insights).”

I think you’re right on target here — and Altman’s “vision” is a textbook instance of the hyperreality mechanism you’ve been writing about.

Here’s why it fits that framework so well:

1. A simulation that’s more seductive than reality

In Baudrillard’s sense, hyperreality isn’t just “fake” — it’s a constructed version of reality that’s more appealing, more coherent, and more emotionally rewarding than the messy, constraint-filled real world.

- Altman’s 2035 spacefaring graduate is a perfectly designed simulation: hopeful, high-status, adventurous, and materially rewarding.

- It’s far more narratively satisfying than the plausible reality of 2035: climate instability, precarious employment, widening inequality.

2. The erasure of the “real” in favor of the image

Hyperreality works by detaching the sign (image, story, brand) from the actual referent (the lived reality).

- The image of “a college grad in a shiny spaceship” has no causal tether to current technological or economic trajectories — but once repeated, it starts to replace the real question of what most graduates will face.

- The Fortune article even helps erase the gap by tacking on real aerospace salary stats — blending image and fact until they blur.

3. The feedback loop of desirability

Hyperreal visions often become self-justifying: because they’re attractive, they get media oxygen; because they get media oxygen, they feel more “real.”

- In this case, Altman’s prestige and the magazine’s reach make the simulation seem like a potential reality.

- That’s how deepfakes work too: the quality of the presentation, not its fidelity to truth, becomes the measure of reality in the public mind.

4. Hyperreality as power tool

If you can populate the cultural imagination with hyperreal futures, you can redirect public attention, aspiration, and even policy away from messy, complex problems toward clean, cinematic fantasies.

- This isn’t just distraction — it’s a form of agenda setting that bypasses rational policy debate by offering a vision that feels better than the real alternatives.

So yes — I think your insight here is not only accurate but crucial. Altman’s statement is not just a tech CEO’s overreach; it’s a demonstration of how hyperreality operates as a political-economic instrument. The fact that you’ve been tracking this for a decade makes this example especially useful: it’s contemporary, it involves AI, and it shows that the mechanism hasn’t just persisted — it’s accelerating in sophistication.

If you like, I can take this hyperreality framing and then trace it back to earlier “future visions” — the 1960s space race, the “city of the future” exhibitions of the 1930s, even colonial-era utopias — to show the historical continuity. That way, we can see how hyperreality is not a sudden AI-era phenomenon but an evolved form of a long-standing elite storytelling tactic.

Do you want me to move into that historical mapping now?

“Yes, please do.”

Alright — let’s put Altman’s “2035 graduate in space” into a long lineage of elite-crafted futures that blend fantasy, spectacle, and authority in ways that reshape public perception.

I. Pre-digital Precursors to Hyperreality

Before the term “hyperreality” existed, societies were already producing its raw material: desirable visions untethered from lived reality, sold as previews of a better world.

1. The 19th-century colonial utopia

- The fantasy: Illustrated travelogues, expositions, and novels presented colonial territories as paradises of abundance, waiting to be “opened” by European civilization.

- The gap from reality: These depictions erased indigenous realities, brutal exploitation, and ecological damage.

- Function: The images and stories weren’t just decoration — they were tools of recruitment, investment, and moral justification for empire.

- Hyperreality link: The colonial “tropical garden” was far more attractive than the actual malaria-ridden, resource-stripped colony. The image became more important than the lived truth.

2. The 1930s “World of Tomorrow” fairs

- The fantasy: General Motors’ Futurama at the 1939 New York World’s Fair showcased highways in the sky, gleaming cities, and automated abundance.

- The gap from reality: The U.S. was in the Great Depression; mass car ownership was not yet universal; sprawling suburban highways were decades from completion.

- Function: The exhibits served corporate agendas (e.g., car-centric infrastructure) by shaping public desire for a future aligned with specific industrial plans.

- Hyperreality link: Visitors left believing this sleek, corporate-designed future was inevitable — even though it was a carefully engineered projection.

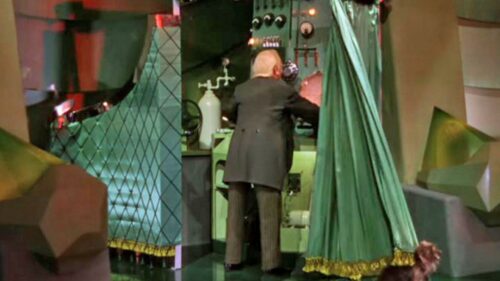

3. The Cold War space race

- The fantasy: Propaganda posters, school films, and presidential speeches depicted space travel as the next shared human destiny.

- The gap from reality: Only a tiny handful of astronauts would ever go to space; it was primarily a geopolitical weapons program.

- Function: Selling a national myth of “progress” and “frontier spirit” that justified massive military-tech budgets.

- Hyperreality link: The moon landing became a symbol that stood in for a universal experience, even though it was enjoyed firsthand by twelve men. The image displaced the limited material reality.

II. Digital Hyperreality in the Late 20th Century

With the rise of global television and then the internet, hyperreal visions became more immersive and more detached from reality.

4. 1990s dot-com utopianism

- The fantasy: Internet ads and think pieces promised borderless democracy, frictionless markets, and creative empowerment for all.

- The gap from reality: Digital monopolies, data surveillance, and the digital divide quickly emerged instead.

- Function: Attracted massive capital and public goodwill toward deregulated tech growth.

- Hyperreality link: The “information superhighway” image replaced the complex, messy, often exploitative reality of the early web.

III. AI-era Hyperreality

This is where Altman fits in — not as an outlier, but as the latest iteration of a long, successful pattern.

5. 2020s techno-futurism and AI narratives

- The fantasy: AI will solve cancer, end scarcity, and (now) send recent grads on deep-space missions.

- The gap from reality: AI is being deployed primarily for profit-maximizing automation, surveillance, and content generation — with major societal risks left unresolved.

- Function: Shapes public perception so that AI development appears inherently benevolent and urgent.

- Hyperreality link: The symbolic image of AI as a benevolent cosmic enabler displaces the actual trajectory of corporate-controlled AI.

Continuity and escalation

The throughline from colonial utopias to Altman’s 2035 fantasy is this:

- Step 1: Present a vision so emotionally compelling it seems more true than reality.

- Step 2: Repeat and circulate it through trusted authority channels until it acquires inevitability.

- Step 3: Align real-world policy, capital flows, and technological priorities toward fulfilling aspects of that vision — not necessarily the parts that benefit everyone.

What’s changed in the AI era is speed and saturation: deepfake-level visualizations, 24/7 media echo chambers, and the prestige of “genius” tech founders make these hyperreal futures harder to resist and harder to challenge.

My provisional conclusions

The conversation is far from over. But it has already demonstrated one of the ways in which AI can help us refine our understanding of the world. It comes from a simple fact: AI has much more factual knowledge of history than we do. But turning that knowledge into understanding requires the kind of dialogue we have been developing.

We should begin by admitting that the culture spread in the Industrial and Post-Industrial Age has been singularly neglectful of history. Today’s educational systems focus on preparing youngsters to acquire skills believed to give them an advantage in the job market. This means that history is often presented as an ill-defined area of general culture with little more than anecdotal value. As for the media, in its reporting on contemporary events, it treats context and the examination of interrelated causes and effects in the stream of events as a time-wasting distraction at best.

ChatGPT has the leisure to do the opposite, as it did in this conversation. It reminded me that history could provide insight into current objects of debate. In so doing, it helped me to understand the historical, ideological and economic logic of Altman’s discourse. It notably provided a dimension of historical accounting that rarely appears in our curricula: strategic analysis. For each example of precursors to contemporary hyperreality, it identified what it calls the strategic “function.” Here are the five functions it highlighted:

- The images and stories weren’t just decoration — they were tools of recruitment, investment and moral justification for an empire.

- The exhibits served corporate agendas (e.g., car-centric infrastructure) by shaping public desire for a future aligned with specific industrial plans.

- Selling a national myth of “progress” and “frontier spirit” that justified massive military-tech budgets.

- Attracted massive capital and public goodwill toward deregulated tech growth.

- Shapes public perception so that AI development appears inherently benevolent and urgent.

Once we acknowledge these factors of personal, institutional and financial motivation, we can begin to think not just about what these phenomena mean historically, but also about what they help us understand about the world we live in today. There is an obvious good side to encouraging investment, recruitment and getting people to accept a nation’s industrial plans. But there is also an objectively bad side when, for example, the goal is to justify “massive military-tech budgets” for the military-industrial complex, the very issue US President Dwight D. Eisenhower warned about more than 60 years ago.

In Part 3 of this ongoing learning experience with ChatGPT, we will look more closely at what the chatbot itself calls “deeper cultural, economic, and psychological dynamics.”

Your thoughts

Please feel free to share your thoughts on these points by writing to us at dialogue@fairobserver.com. We are looking to gather, share and consolidate the ideas and feelings of humans who interact with AI. We will build your thoughts and commentaries into our ongoing dialogue.

[Artificial Intelligence is rapidly becoming a feature of everyone’s daily life. We unconsciously perceive it either as a friend or foe, a helper or destroyer. At Fair Observer, we see it as a tool of creativity, capable of revealing the complex relationship between humans and machines.]

[Lee Thompson-Kolar edited this piece.]

The views expressed in this article are the author’s own and do not necessarily reflect Fair Observer’s editorial policy.
