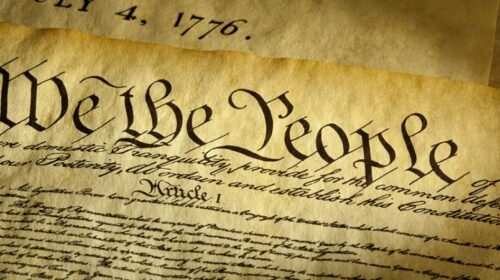

Even Now, Donald Trump Is a Danger to American Democracy

Donald Trump is currently facing 34 felony counts of falsifying business records in a New York trial. It is the first time that a former American president has faced criminal charges. The United States now joins a number of democratic countries...